Maximizing Business Opportunities in the Regional Midsize and Small Business Market

How did a major IT solution vendor use IDC research to establish a strategy for its domestic regional business, where customer needs are diversifying?

Background

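A major domestic IT solution vendor has placed branch offices in each region of the country. In addition to delivering and supporting IT deployments for regional midsize and small companies and organizations, it has worked to revitalize regional economies.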

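As the vendor runs a regional business in which needs are diversifying, it increasingly needs not only to draw up regional business plans and regional strategies for growth, but also to review its sales organization and its delivery and support organization in order to respond to rapid changes in the economic environment.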

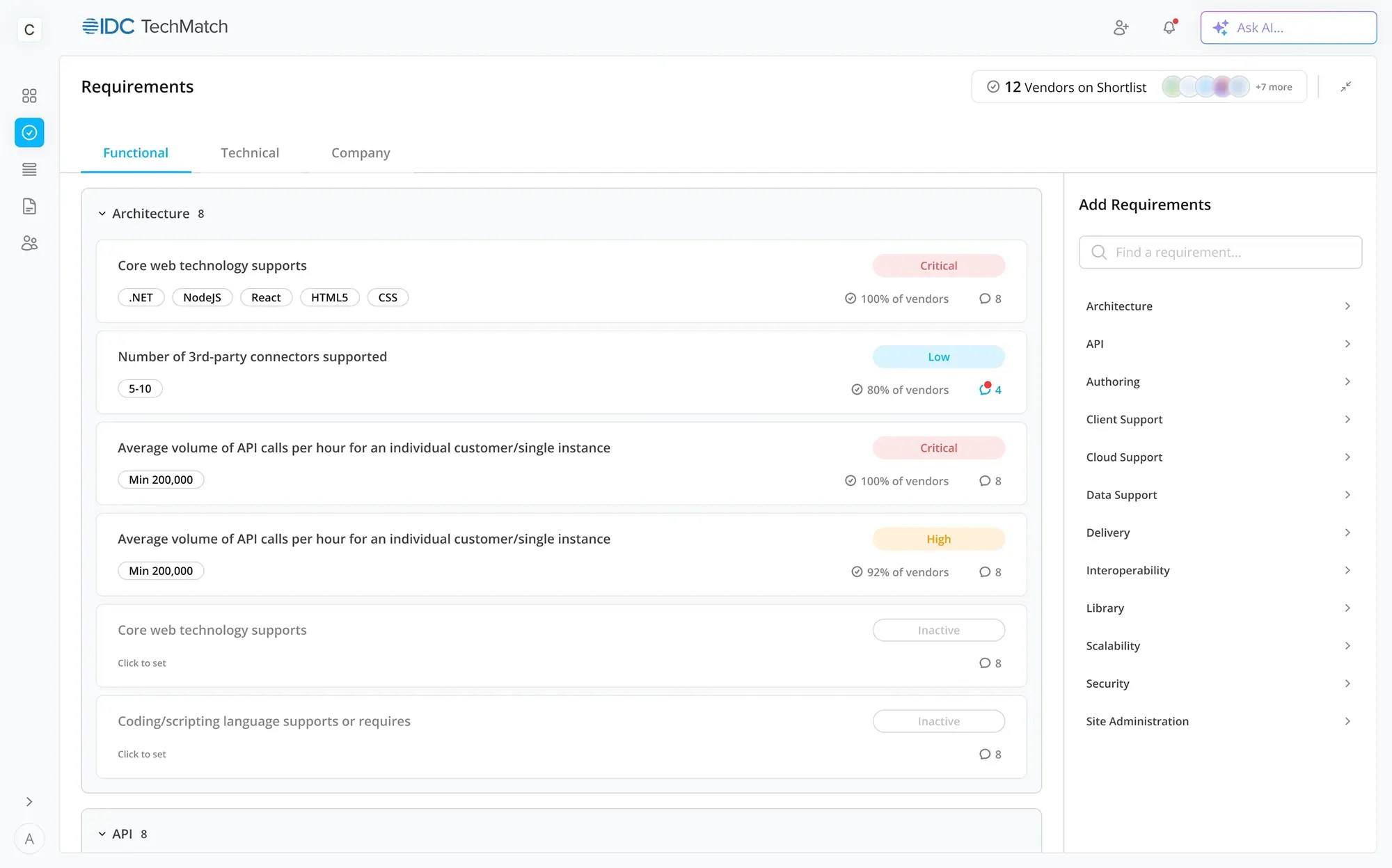

Solution

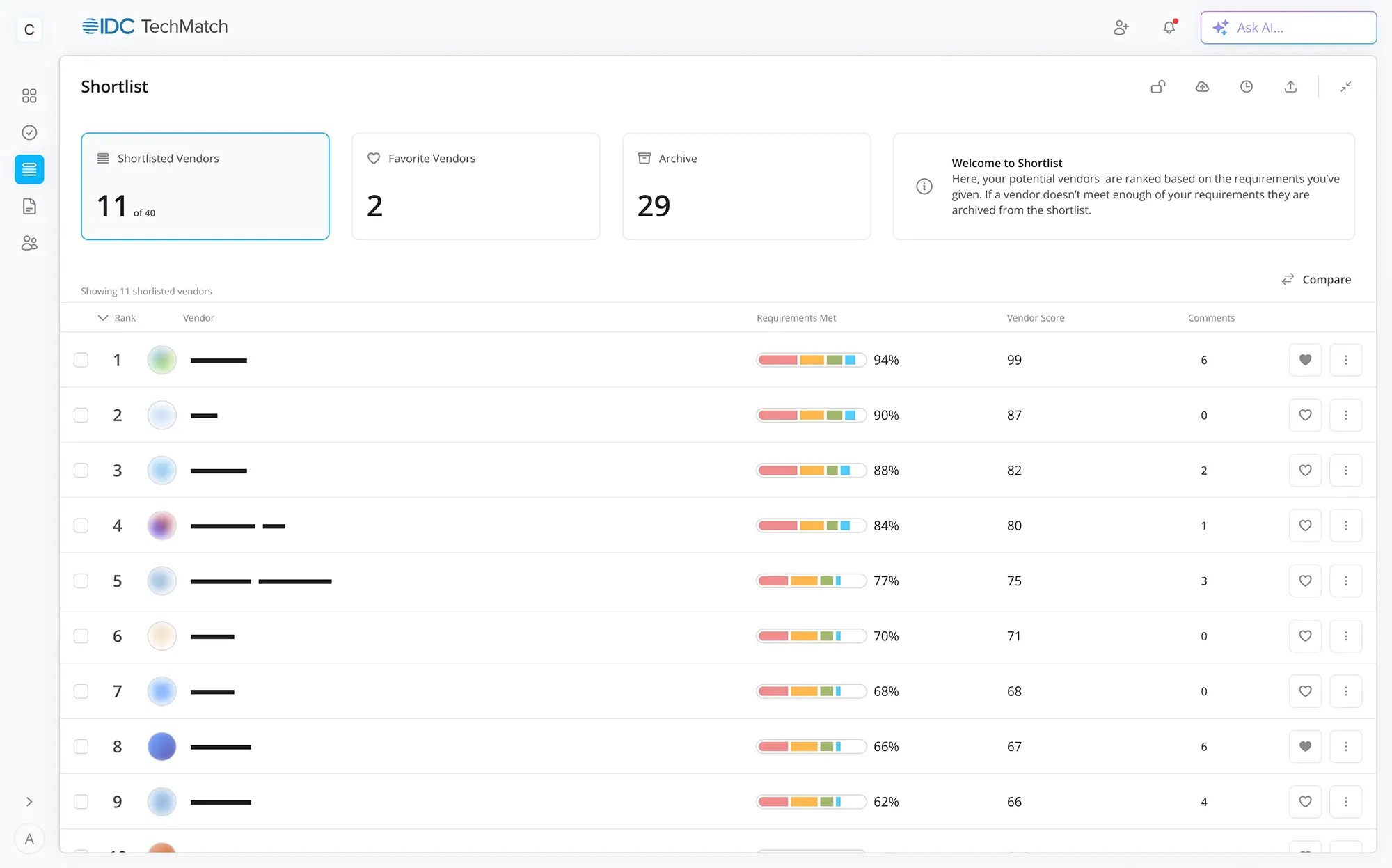

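IDC provided market size forecasts customized to the IT solution vendor's regional sales organization and solution offerings, and also provided strategic support on matters such as focus areas and focus regions. The vendor used these to review its organization and to draw up an investment plan, including which regions should receive increased investment.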

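In addition, IDC provided information on the regional business organization and strategy of the vendor's main competitors and of the vendors selected for benchmark analysis. This information helped each regional branch office run its business and define tactical actions.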

Results

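Previously, the vendor had based its business plans and strategies on coarse-grained data, or on market data that did not match its own solution categories. By using IDC market data tied to its actual business, it was able to draw up realistic plans and strategies.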

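In addition, by reviewing its regional organization, including staffing, the vendor made its business activities more efficient, which led to higher sales and improved profit margins.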